The Valence–Arousal Model: A Simple Map to Understand Complex Human Emotions

Human emotions are complex, layered, and constantly shifting. Yet for years, most people and even most technologies tried to understand them through simple labels like happy, sad, or angry. The problem is that real emotions don’t fit neatly into boxes. They blend, overlap, and change intensity from moment to moment. That’s why the valence–arousal model has become one of the most trusted frameworks in psychology and modern emotion AI. It helps explain emotions in a way that feels both intuitive and scientifically sound.

Today, as organizations increasingly adopt emotion recognition software and video emotion recognition tools, this model plays a crucial role in making emotion analysis more accurate and context-aware. Before exploring how tools like Imentiv AI use this model, it helps to understand the basics.

Understanding Valence and Arousal

The valence–arousal model is built on two simple ideas.

- Valence refers to how positive or negative an emotion feels. A joyful laugh has high valence, while the sinking feeling of sadness has low valence.

- Arousal, on the other hand, describes how activated or energized that emotion is. Excitement is high in arousal; calmness sits at the low end.

How Emotions Fit onto the Grid

Once you visualize emotions along these two axes, patterns become surprisingly clear. Happiness can be a warm, quiet contentment (high valence, low arousal) or an energetic burst of enthusiasm (high valence, high arousal). Anger might show up as simmering irritation or explosive rage—both negative, but very different in intensity.

Even sadness has variations. Someone may feel deeply upset and withdrawn (low valence, low arousal), or anxious and restless when sadness is paired with emotional overwhelm (low valence, high arousal). The model is flexible enough to capture all these nuances, which is why it has become a foundation for modern emotional psychology.

One of the most useful aspects of this grid is how it highlights emotional ambiguity. For example, nervous excitement—like before giving a presentation—sits somewhere between positive and negative valence but is clearly high in arousal. Traditional emotional labels struggle to describe these blended states, but the valence–arousal model handles them easily.
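To make the grid concrete, here is a minimal Python sketch that places a few of the examples above on the two axes. The `EmotionPoint` class, the `quadrant` helper, the [-1, 1] range, the `neutral_band` threshold, and the example coordinates are all illustrative assumptions, not values taken from any published dataset or from a specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmotionPoint:
    """A point on the valence-arousal grid (both axes assumed to lie in [-1, 1])."""
    valence: float  # -1 = very unpleasant, +1 = very pleasant
    arousal: float  # -1 = very calm, +1 = very activated


def quadrant(point: EmotionPoint, neutral_band: float = 0.1) -> str:
    """Describe where a point falls on the grid.

    Values within `neutral_band` of zero are reported as "neutral" on that
    axis, which lets blended states sit between positive and negative.
    """
    def level(value: float) -> str:
        if abs(value) <= neutral_band:
            return "neutral"
        return "high" if value > 0 else "low"

    return f"{level(point.valence)} valence, {level(point.arousal)} arousal"


# Hypothetical coordinates chosen only to mirror the examples in the text.
examples = {
    "quiet contentment": EmotionPoint(0.7, -0.5),
    "enthusiasm": EmotionPoint(0.8, 0.8),
    "withdrawn sadness": EmotionPoint(-0.7, -0.6),
    "nervous excitement": EmotionPoint(0.05, 0.8),
}

for name, point in examples.items():
    print(f"{name}: {quadrant(point)}")
```

Because the space is continuous, the same helper describes nervous excitement (valence near zero, arousal high) without needing a dedicated label for it.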

Why This Model Works Better Than Positivity vs Negativity

For a long time, emotions were understood as simply positive or negative. But life doesn’t work that way. Two emotions can both feel “positive” yet be completely different experiences. Calm satisfaction and ecstatic joy are both pleasant; however, they differ greatly in energy. The same goes for negative emotions: fear and boredom are both unpleasant, but one is high arousal, and the other is low.

Because of this, the valence–arousal model avoids oversimplifying emotional signals. It provides a continuous space rather than rigid boundaries, something essential for interpreting real human behavior.

What This Means for Emotion AI and Video Analysis

Modern emotion recognition software has evolved far beyond reading facial expressions. Today, systems must interpret voice tone, micro-expressions, body language, speech content, and overall behavioral context. This is where the valence–arousal model becomes indispensable.

In video emotion recognition, for example, a system might detect:

- a positive emotion with low arousal during a calm, confident introduction

- or a negative emotion with high arousal during a stressful interview question

The model helps AI understand that not all smiles represent joy and not all frowns represent sadness. Emotion lives in a continuum, not a checklist.

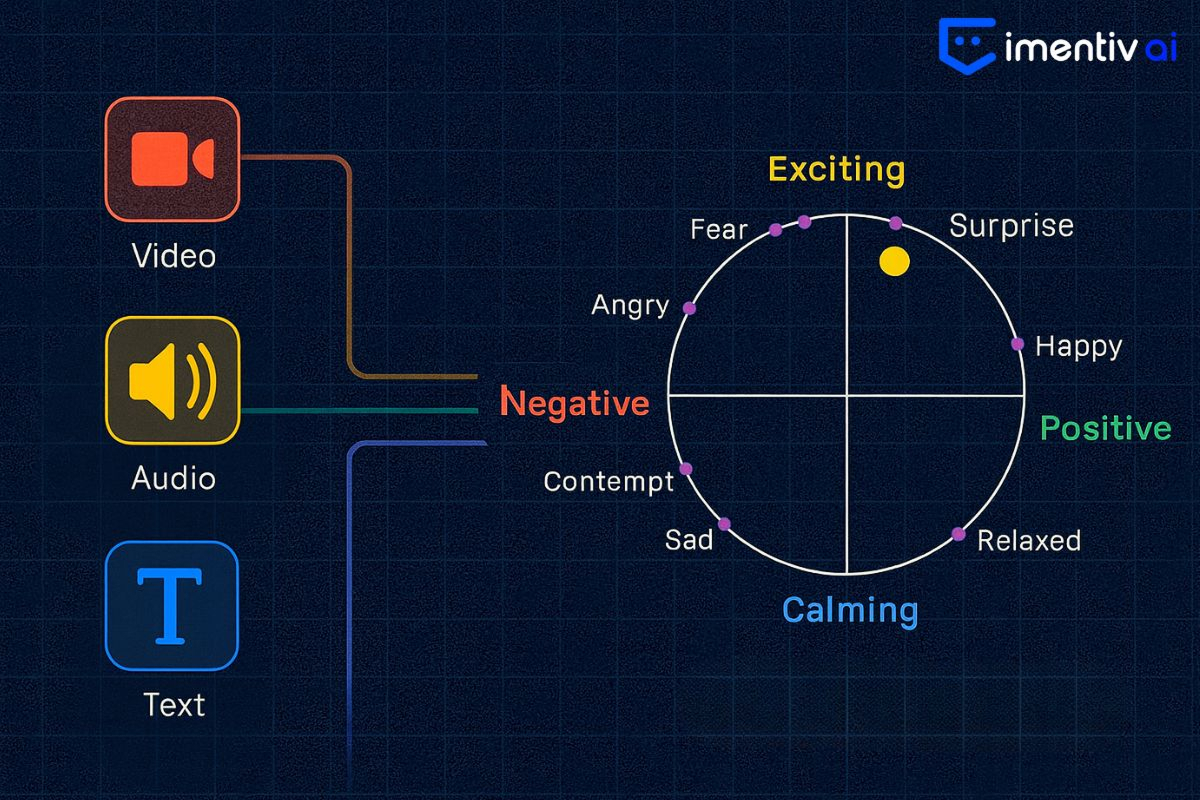

How Imentiv AI Maps Emotions Using the Valence–Arousal Model

Imentiv AI doesn't guess emotions from a single signal. It builds an emotional profile by analyzing multiple modalities at the same time and then converts these signals into valence (pleasantness), arousal (activation level), and intensity (strength of emotional expression) on a continuous scale.

Here’s how the mapping works behind the scenes:

1. Signal Extraction Across Modalities

The system analyzes several types of inputs in parallel:

- Facial expressions: micro-movements, action units, muscle tension

- Voice tone: pitch, pace, energy, tremor, loudness

- Speech content: sentiment, word choice, emotional meaning

- Behavioral cues: pauses, hesitations, gestures, gaze behavior

Each signal contributes a different layer of emotional information.

2. Feature Encoding and Normalization

These signals are converted into numerical features, for example:

- rising pitch → higher arousal

- slower, softer voice → lower arousal

- positive sentiment words → higher valence

- frown muscle activation → lower valence

Each modality—facial, voice, and text—is processed and normalized independently. This ensures that valence and arousal are calculated within the context of each signal type, allowing emotional patterns to be analyzed clearly and without cross-modal blending.
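One common way to normalize each modality independently is to standardize its readings using only that modality's own statistics. The sketch below assumes z-score normalization and uses made-up feature names and values; the article does not say which normalization method Imentiv AI applies.

```python
from statistics import mean, pstdev


def zscore(values):
    """Standardize one modality's raw readings to zero mean and unit variance."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant signal carries no variation, so map it to all zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical raw readings on very different scales.
raw = {
    "voice_pitch_hz": [180.0, 200.0, 220.0],
    "text_sentiment": [-0.2, 0.0, 0.2],
}

# Each modality is normalized using only its own statistics.
normalized = {name: zscore(values) for name, values in raw.items()}

for name, values in normalized.items():
    print(name, [round(v, 3) for v in values])
```

After this step a rise in pitch and a rise in sentiment are expressed in comparable units, even though hertz and sentiment scores started out on unrelated scales.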

3. Mapping to Valence and Arousal Coordinates

Each signal predicts its own small shift in valence or arousal. The system aggregates these micro-predictions into two final continuous scores:

- Valence score: a value representing how positive or negative the emotion is

- Arousal score: a value representing how calm or intense the emotion is

These two numbers form the coordinates on the valence–arousal grid. Imentiv AI also provides an intensity score, which reflects how strongly the emotion is being expressed at a given moment. This helps distinguish between subtle emotional signals and more pronounced reactions, adding an extra layer of clarity to the analysis.
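As a rough illustration of the aggregation step, the sketch below combines several micro-predictions from a single modality into one valence score and one arousal score using a weighted average. The `aggregate` function, the weights, the [-1, 1] range, and the sample numbers are assumptions made for this example; the article does not describe the actual aggregation rule or how the intensity score is computed, so intensity is left out.

```python
def aggregate(predictions):
    """Combine (value, weight) pairs into one score clamped to [-1, 1]."""
    total_weight = sum(weight for _, weight in predictions)
    if total_weight == 0:
        return 0.0  # no usable evidence, so fall back to neutral
    score = sum(value * weight for value, weight in predictions) / total_weight
    return max(-1.0, min(1.0, score))


# Hypothetical micro-predictions from one modality (for example, voice).
# Each pair is (predicted shift, weight given to that signal).
valence_signals = [(0.6, 2.0), (0.2, 1.0), (-0.1, 1.0)]
arousal_signals = [(0.8, 1.0), (0.4, 1.0)]

# The two aggregated scores form the coordinates on the grid.
point = (aggregate(valence_signals), aggregate(arousal_signals))
print(point)
```

A weighted average is only one plausible choice; it keeps the result inside the same range as the inputs and lets more reliable signals count for more.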

4. Dynamic Emotional Trajectory

Instead of giving one static emotion label, Imentiv AI generates a moving emotional trajectory. This shows how the person’s emotional state changes over time, for example from calm to tense, or from neutral to engaged.

By grounding its analytics in the valence–arousal model, Imentiv AI offers a more nuanced and context-rich understanding of human emotion, something that traditional emotion frameworks struggle to provide.

Want to see how emotions are mapped beyond labels? Explore how Imentiv AI applies the valence–arousal model to real human interactions.