The Unspoken Language of Leadership: Audio Emotion Recognition in CEO Talks

In corporate leadership, every word a CEO utters is carefully watched. But there is often a deeper layer: the emotion carried in their voice, the shifts in tone, the subtle signs that reveal how the person truly feels. Imentiv’s Audio Emotion Analysis, powered by automatic speech emotion recognition using machine learning, helps bring those cues to light, revealing sensitivity, confidence, communication style, and emotional state.

Putting Emotion AI to the Test

In this blog post, you’ll see how analyzing the speech patterns of CEOs, CFOs, and other top leaders can highlight subtle emotional signals in their voice, offering fresh insights into leadership style, confidence, and decision-making tone.

Now let’s look at how it performs in a real-world scenario.

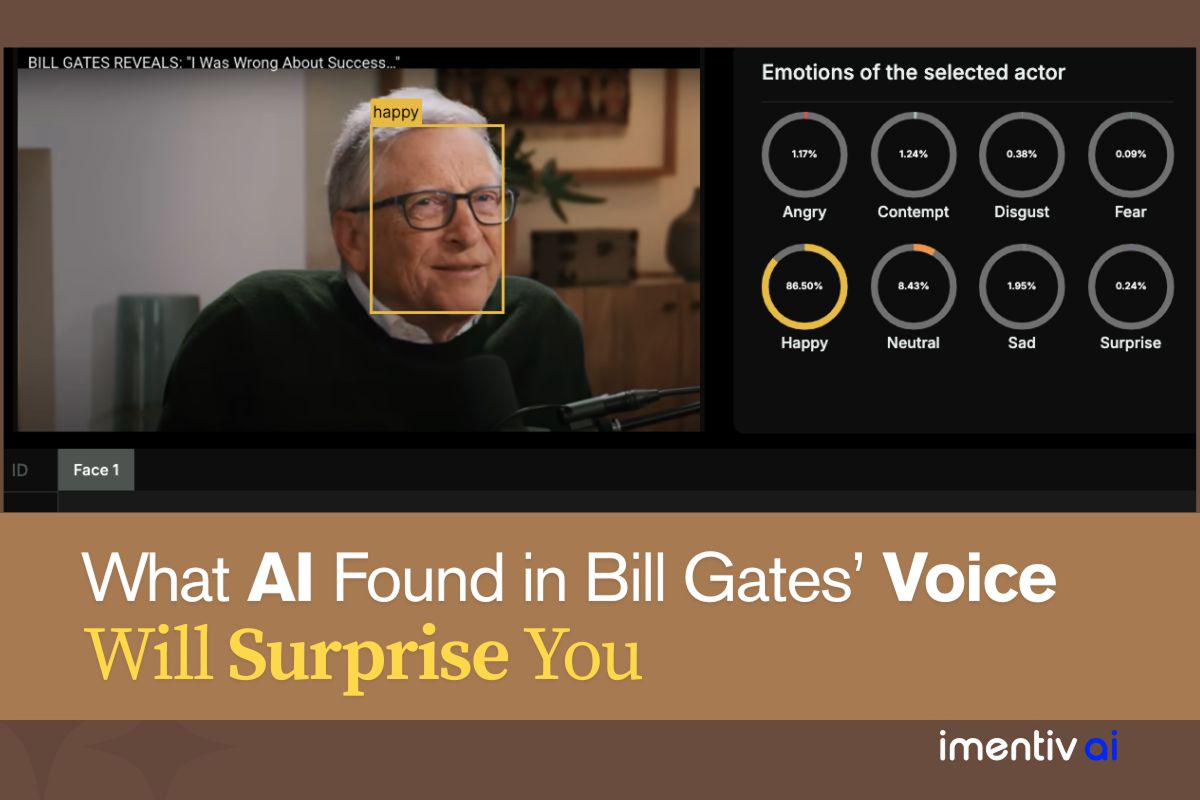

This example features audio from a popular podcast conversation with Bill Gates, where he talks about personal experiences, mindset shifts, and the lessons that shaped his thinking. Using our Audio Emotion AI tool, combined with expert psychological interpretation, we break down how the emotional flow of the conversation is reflected in how he speaks.

This foundation will help you better understand how AI-driven insights and psychological interpretation come together in leadership communication.


This is an audio emotion analysis of a 60-minute podcast featuring Bill Gates. Happiness was the most frequently detected emotion, appearing in 36.42% of the speech segments and pointing to a predominantly positive affect. Disgust (16.77%) and fear (14.03%) also appear at notable levels, suggesting moments of moral evaluation and emotional vulnerability during the conversation. A neutral tone (16.38%) reflects balanced or objective discourse. Surprise (8.12%), sadness (3.92%), anger (3.37%), and boredom (0.98%) were present at lower frequencies, highlighting episodic shifts in tone likely linked to specific topics or reflective moments.
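Percentages like these are, in the simplest reading, the share of segments carrying each label. The sketch below assumes exactly that (one dominant emotion per segment); the helper name `emotion_distribution` and the sample labels are hypothetical, and this is not Imentiv's internal computation. It also checks that the eight reported shares add up as expected.

```python
from collections import Counter


def emotion_distribution(segment_labels):
    """Return each emotion's share of segments as a percentage (2 decimals).

    Assumes one dominant emotion label per segment, matching the
    "detected in X% of the speech segments" phrasing above.
    """
    if not segment_labels:
        return {}
    counts = Counter(segment_labels)
    total = len(segment_labels)
    # most_common() orders emotions from most to least frequent
    return {emo: round(100 * n / total, 2) for emo, n in counts.most_common()}


# Hypothetical labels for eight segments (not data from the Gates podcast)
labels = ["happy", "neutral", "happy", "disgust",
          "fear", "happy", "surprise", "neutral"]
print(emotion_distribution(labels))
# {'happy': 37.5, 'neutral': 25.0, 'disgust': 12.5, 'fear': 12.5, 'surprise': 12.5}

# The eight shares reported for the podcast, as a consistency check
reported = {"happy": 36.42, "disgust": 16.77, "neutral": 16.38, "fear": 14.03,
            "surprise": 8.12, "sadness": 3.92, "anger": 3.37, "boredom": 0.98}
print(round(sum(reported.values()), 2))  # 99.99, the small gap being rounding
```

Because each share is rounded independently, a full breakdown can land slightly off 100%, as the reported figures do.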

Cognitive Reappraisal & Self-Correction (Disgust, Surprise, Happy)

Gates openly acknowledges he was “wrong” about a past belief related to success. This reflects cognitive reappraisal, a psychological process where one revisits and reinterprets earlier assumptions based on maturity and new experiences.

- The disgust detected is likely self-directed rather than about others. It suggests internal moral reflection, perhaps tied to his earlier, more tech-centric view of success, which he now contrasts with human-centered values like empathy, education, and well-being.

- The happy tone emerging here is emotionally significant; it’s not surface-level joy but deeper, integrated contentment. It signals that Gates isn’t ashamed of the past but rather grateful for the personal growth that came from reevaluating it.

- Surprise points to moments of sudden realization, where Gates recognizes blind spots in his earlier worldview, especially around underappreciated human experiences like grief, inequality, and systemic gaps in education.

Family Systems Theory & Emotional Imprinting

Gates’s reflections on his parents, particularly his father, illustrate how foundational family relationships shape emotional values, discipline, and identity.

- He speaks of his father with a deep sense of reverence, pointing to strong emotional modeling during his upbringing. This aligns with Bowen’s Family Systems Theory, which emphasizes the intergenerational transmission of beliefs, emotional responses, and relational patterns.

- Gates’s narrative underscores how his moral compass and long-term philanthropic motivations may have been emotionally imprinted through this close familial bond.

Existential Grief and the Psychology of Loss (Fear, Sadness)

When Gates discusses the death of close friends and his father, the emotional tone shifts. His voice carries fear and sadness, indicating a confrontation with mortality, impermanence, and the search for meaning.

- This goes beyond personal grief; it evolves into motivational grief, where pain becomes a driving force behind purposeful action, such as global health work or education reform.

- Importantly, Gates’s openness about seeking therapy signals high emotional literacy. It normalizes mental health support for elite performers, especially those dealing with unresolved loss, pressure, or the weight of legacy.

Success Reframed: From External Achievement to Inner Fulfillment

Gates narrates a clear psychological transition from valuing external achievement to prioritizing intrinsic meaning.

- In his early career, success meant building Microsoft, driving innovation, and achieving market dominance.

- In his later years, it’s redefined: success now means nurturing family bonds, promoting educational equity, and contributing to global well-being.

- This aligns with Maslow’s theory of self-transcendence, where individuals seek fulfillment not through personal gain but by serving others and leaving a lasting impact.

Motivation in Education: Relevance and Intrinsic Drive

Gates’s focus on education reflects key principles from Self-Determination Theory, particularly the need for:

- Autonomy – Students must feel a sense of choice and ownership in what they learn.

- Competence – They need to believe they’re capable of mastering the subject.

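- Relatedness – Students need to feel connected to the people around them, such as teachers and peers; learning tied to real-world issues they care about can strengthen that sense of connection.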

His advocacy for making learning more meaningful resonates with constructivist models, which argue that students are more engaged when they can connect new knowledge to their own lives. Gates addresses the psychological disengagement that traditional schooling often creates.

Therapy and Emotional Resilience

Gates helps normalize emotional introspection, especially among high-functioning individuals, by speaking about therapy without stigma.

- This shows resilience, not weakness, highlighting how therapy fosters grief integration, self-awareness, and adaptive coping.

- He also sets a social example, encouraging others in leadership positions to embrace emotional support systems as a form of strength, not vulnerability.

Psychological Principle Reflected: Wisdom Integration & Reflective Equilibrium

At the core of this emotional narrative is a sophisticated psychological process: wisdom integration, the ability to apply emotional insight to past experiences and reshape beliefs accordingly.

- Gates continuously revisits and refines his earlier worldviews in light of new emotional and cognitive data.

- This is a classic case of reflective equilibrium, where inner beliefs are balanced and restructured through honest introspection and real-life learning.

Psychological Constructs Referenced

- Cognitive Reappraisal (Emotion Regulation)

- Attachment & Family Systems Theory

- Existential Psychology & Grief

- Self-Determination Theory (Motivation)

- Maslow’s Hierarchy – Self-Transcendence

- Reflective Equilibrium

- Therapeutic Openness & Stigma Reduction

Emotional Maturity and Human-Centric Success

This talk is a compelling example of emotional maturity in leadership. Gates speaks not just with intellect, but from a place of lived experience, grief, and deep personal growth.

- The AI emotion data reinforces this complexity: reflective highs (happiness, surprise) are interwoven with meaningful lows (fear, sadness, disgust), indicating not emotional avoidance, but genuine psychological integration.

- The "happy" emotion detected isn’t simplistic; it reflects a mature happiness that comes from accepting life’s contradictions and choosing purpose, connection, and contribution over ego.

These insights set the stage for understanding how advanced tools like Imentiv’s Audio Emotion Analysis, powered by automatic speech emotion recognition using machine learning, help uncover sensitivity, confidence, communication style, and emotional state in leadership communication. In the following sections, we’ll walk through how our system analyzes vocal data to detect emotional signals hidden in speech, from subtle shifts in tone to changes in pace and intensity, offering a powerful lens into leadership dynamics.

What is Audio Emotion Recognition?

Audio Emotion Recognition (AER), also known as Speech Emotion Recognition (SER), is a field of study that focuses on identifying human emotional states from speech.

This is achieved by analyzing various acoustic features of the voice, such as pitch, tone, rhythm, and speaking rate. Unlike traditional sentiment analysis that relies on textual data, AER delves into the paralinguistic cues that often convey more about a speaker's emotions than the words themselves.
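To make "acoustic features" concrete, here is a minimal sketch in plain NumPy of two of them: frame-level loudness (RMS energy) and pitch estimated from the autocorrelation peak. It is a textbook illustration under assumed parameters (16 kHz audio, 40 ms frames with a 10 ms hop, a 75 to 400 Hz pitch search range), not Imentiv's feature pipeline; features such as speaking rate or voice quality need more machinery than fits here.

```python
import numpy as np


def frame_signal(x, frame_len, hop):
    """Split a 1-D signal into overlapping frames, dropping any partial tail."""
    if len(x) < frame_len:
        raise ValueError("signal shorter than one frame")
    n_frames = 1 + (len(x) - frame_len) // hop
    return np.stack([x[i * hop: i * hop + frame_len] for i in range(n_frames)])


def rms_energy(frames):
    """Root-mean-square energy per frame, a rough proxy for vocal intensity."""
    return np.sqrt(np.mean(frames ** 2, axis=1))


def estimate_pitch(frame, sr, fmin=75.0, fmax=400.0):
    """Estimate one frame's fundamental frequency (Hz) from its autocorrelation.

    Returns 0.0 for near-silent frames. fmin/fmax limit the search to an
    assumed speaking-voice range.
    """
    frame = frame - frame.mean()
    if np.max(np.abs(frame)) < 1e-3:
        return 0.0
    # Keep non-negative lags only: ac[k] is the correlation at a lag of k samples
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]
    lag_min = int(sr / fmax)
    lag_max = min(int(sr / fmin), len(ac) - 1)
    lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return sr / lag


# Synthetic stand-in for a voiced sound: one second of a 220 Hz tone
sr = 16000
t = np.arange(sr) / sr
tone = 0.5 * np.sin(2 * np.pi * 220 * t)
frames = frame_signal(tone, frame_len=sr // 25, hop=sr // 100)  # 40 ms / 10 ms
pitches = [estimate_pitch(f, sr) for f in frames]
print(f"median pitch: {np.median(pitches):.1f} Hz")  # close to 220 Hz
print(f"mean RMS energy: {rms_energy(frames).mean():.3f}")
```

Because the pitch estimate snaps to whole-sample lags, it lands within a few hertz of the true 220 Hz; production systems typically refine the peak and add voicing detection.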

Imentiv Audio Emotion AI processes voice data to interpret the emotional dynamics present in speech. By analyzing vocal cues, including tone, pace, and intensity, our system identifies emotional shifts and patterns that reflect the speaker’s underlying state. It also separates speakers automatically (speaker diarization), distinguishing different voices and assigning each its own emotion profile.

The dashboard presents Audio Emotion AI insights in a clear, visual format, showing emotion intensity, transitions, and the overall emotional flow of the audio. Users can actively explore these patterns to assess speaker presence, emotional consistency, and the impact of the voice across different contexts, from interviews and talks to user research and leadership communication.

The Audio Emotions We Analyze

Our approach to Emotion Analysis from Speech focuses on a comprehensive set of fundamental human emotions. Imentiv Audio Emotion Recognition technology specifically identifies and categorizes the following core emotions:

Sad: Characterized by lower pitch, slower tempo, and reduced vocal energy.

Happy: Often associated with higher pitch, faster tempo, and increased vocal energy.

Surprise: Typically marked by sudden changes in pitch and volume, and often a short, sharp intake of breath.

Fear: Can manifest as a higher, trembling voice, increased speech rate, and irregular pitch.

Boredom: Indicated by a monotonous tone, lower pitch, and slower speech rate.

Angry: Characterized by a louder, harsher voice, higher pitch, and faster speech rate.

Disgust: Often expressed through a low, guttural tone and a constricted vocal quality.

Neutral: Represents a baseline emotional state, with stable pitch, tempo, and volume.

Here is what makes the analysis so detailed.

Speaker-by-speaker emotion analysis

One of the key features of Imentiv’s Audio Emotion AI is its ability to analyze emotions speaker by speaker, using a process called speaker diarization. This means the system automatically detects and separates different speakers within an audio recording.

Even in a single-speaker scenario, it identifies and labels that speaker (e.g., as Speaker 1), allowing their emotional state to be tracked across the full timeline. In multi-speaker interactions, such as discussions, interviews, or press briefings, the system distinguishes each voice and assigns emotion data to the correct speaker. This makes it easy to follow how each person’s emotions shift during the conversation and compare their emotional patterns individually. It brings out the emotional nuances in both solo talks and interactive settings.

Rather than offering just an overall emotional summary, our Emotion AI breaks down the audio interaction, assigning specific emotional states to individual voices, whether a CEO, an analyst, an interviewer, or another participant. You can track how each speaker's emotional tone shifts during the conversation, observe responses to particular questions, and compare the emotional patterns of different individuals. This level of granularity can reveal how each person communicates under pressure and which emotional signals shape their leadership or response style.
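Once diarization has attributed each segment to a speaker, the per-speaker breakdown described above is essentially a group-by. The sketch below assumes segments already carry a speaker label and one dominant emotion, and it weights emotions by talk time rather than by segment count; both are illustrative choices, and the `Segment` type and `per_speaker_profile` helper are hypothetical, not Imentiv's output format. Diarization itself (deciding who spoke when) is not shown.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Segment:
    speaker: str   # label from diarization, e.g. "Speaker 1"
    start: float   # seconds
    end: float     # seconds
    emotion: str   # dominant emotion detected in the segment


def per_speaker_profile(segments):
    """Share (%) of each speaker's talk time spent in each emotion."""
    seconds = defaultdict(lambda: defaultdict(float))
    for seg in segments:
        seconds[seg.speaker][seg.emotion] += seg.end - seg.start
    profile = {}
    for speaker, by_emotion in seconds.items():
        total = sum(by_emotion.values())
        if total <= 0:
            continue  # skip speakers with no measurable talk time
        profile[speaker] = {emo: round(100 * s / total, 1)
                            for emo, s in by_emotion.items()}
    return profile


# Hypothetical interview: a CEO (Speaker 1) and a host (Speaker 2)
segments = [
    Segment("Speaker 1", 0.0, 12.0, "neutral"),
    Segment("Speaker 2", 12.0, 20.0, "happy"),
    Segment("Speaker 1", 20.0, 32.0, "happy"),
    Segment("Speaker 1", 32.0, 36.0, "fear"),
]
print(per_speaker_profile(segments))
```

Weighting by duration keeps a long, calm answer from being outvoted by several short reactive turns; counting segments instead is an equally valid choice if segments are of similar length.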

Time-segmented emotion data

Another important feature of our Speech Emotion Recognition technology is time-segmented emotion analysis, which captures how emotions shift throughout the audio timeline. Our AI system divides the recording into meaningful intervals and tracks changes in emotional intensity, valence, and tone across each segment.

This helps users make sense of the emotional flow in anything from a short speech to a full-length podcast, offering a structured view of how the speaker's emotions evolve over time.

For example, in a leadership context, a CEO might start with a neutral tone, grow more upbeat while presenting strong results, and then shift into sadness or tension when addressing challenges or sensitive questions.

This kind of temporal mapping adds depth to the analysis, helping listeners recognize emotional patterns and transitions as they occur during different stages of communication.
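Time segmentation can be sketched as resampling labeled segments onto fixed windows and flagging where the dominant emotion changes. The 30-second window, the `(start, end, emotion)` tuple format, and both helper names are assumptions for illustration, not how Imentiv chooses its intervals; the example replays the hypothetical CEO arc described above (neutral, then upbeat, then sad).

```python
import math


def dominant_per_window(segments, window, total_duration):
    """Label each fixed-length window with the emotion that covers most of it.

    segments: list of (start, end, emotion) tuples in seconds.
    Returns a list of (window_start, emotion or None).
    """
    labels = []
    for i in range(math.ceil(total_duration / window)):
        w_start = i * window
        w_end = min(w_start + window, total_duration)
        coverage = {}
        for start, end, emotion in segments:
            overlap = min(end, w_end) - max(start, w_start)
            if overlap > 0:
                coverage[emotion] = coverage.get(emotion, 0.0) + overlap
        labels.append((w_start, max(coverage, key=coverage.get) if coverage else None))
    return labels


def transitions(window_labels):
    """Return (time, from_emotion, to_emotion) wherever the dominant emotion changes."""
    return [(t, prev, cur)
            for (_, prev), (t, cur) in zip(window_labels, window_labels[1:])
            if cur != prev]


# Hypothetical 3-minute CEO update: neutral opening, upbeat results, sad close
segments = [(0, 60, "neutral"), (60, 150, "happy"), (150, 180, "sad")]
windows = dominant_per_window(segments, window=30, total_duration=180)
print(transitions(windows))  # [(60, 'neutral', 'happy'), (150, 'happy', 'sad')]
```

A shorter window catches brief emotional flickers at the cost of noisier labels; a longer one smooths them away.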

The Emotion Wheel: Valence, Arousal, and Engagement

Imentiv’s Audio Emotion AI complements discrete emotion tags with an Emotion Wheel based on the valence–arousal model. This model maps emotions in a two-dimensional space, helping to interpret both emotional intensity and speaker engagement.

Valence reflects how pleasant or unpleasant an emotion is, from negative (like sadness, anger, or fear) to positive (like happiness or surprise).

Arousal reflects the energy level behind an emotion, from low (like boredom or calmness) to high (like anger, fear, or excitement).

This integration allows us to:

- Quantify Emotional Intensity: Understand how strongly an emotion is being expressed.

- Assess Engagement Levels: Determine the level of activation or energy associated with the speaker's emotional state.

For example, while both anger and fear are negative emotions, anger usually shows higher arousal. Likewise, surprise often carries more arousal than happiness, even though both are positive. In the context of leadership communication, such differences reveal how speakers react to pressure, shift tone, or energize their message.
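As a rough illustration of the valence–arousal idea, the sketch below places each emotion at an assumed (valence, arousal) coordinate, treats distance from the neutral origin as intensity, reads arousal as the engagement level, and uses the angle as the position on the wheel. The coordinates are hypothetical values chosen only to respect the orderings described above (anger above fear, surprise above happiness in arousal); they are not Imentiv's calibration, and "intensity as distance from the origin" is one common convention rather than the product's documented formula.

```python
import math

# Illustrative (valence, arousal) coordinates on a [-1, 1] scale. These are
# assumed values chosen to respect the orderings in the text (anger above
# fear, surprise above happiness on arousal), not Imentiv's calibration.
VA = {
    "neutral":  (0.0, 0.0),
    "happy":    (0.8, 0.5),
    "surprise": (0.4, 0.8),
    "angry":    (-0.6, 0.9),
    "fear":     (-0.7, 0.6),
    "disgust":  (-0.6, 0.3),
    "sad":      (-0.7, -0.4),
    "boredom":  (-0.3, -0.7),
}


def wheel_position(valence, arousal):
    """Return (intensity, angle) for a point in valence-arousal space.

    Intensity is the distance from the neutral origin; the angle is measured
    in degrees counter-clockwise from the positive-valence axis.
    """
    intensity = math.hypot(valence, arousal)
    angle = math.degrees(math.atan2(arousal, valence)) % 360
    return intensity, angle


def mean_position(labels):
    """Average the coordinates of per-segment emotion labels (neutral if empty)."""
    points = [VA[label] for label in labels]
    if not points:
        return (0.0, 0.0)
    n = len(points)
    return (sum(v for v, _ in points) / n, sum(a for _, a in points) / n)


for emotion in ("angry", "fear", "surprise", "happy"):
    intensity, angle = wheel_position(*VA[emotion])
    # Arousal doubles as the engagement reading described above
    print(f"{emotion:>8}: arousal={VA[emotion][1]:+.1f} "
          f"intensity={intensity:.2f} angle={angle:.0f} deg")
```

Averaging a segment sequence with `mean_position` gives a single point for a whole talk, though it can hide swings between opposite quadrants, which is why time-segmented views remain useful.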

Transcript-Level Emotion Analysis

Another key feature of our Audio Emotion AI is transcript emotion analysis. The system automatically converts audio into text and then links each transcript line to the corresponding speaker and time segment. Each segment is analyzed line by line for emotional tone, using a set of 29 fine-grained emotions.

Unlike the Emotion Wheel used for audio signals, transcript emotion analysis focuses on discrete emotional categories, offering a clearer look at how specific phrases or sentences carry emotional weight. This provides an added layer of context, especially useful for interpreting what was said alongside how it was said.
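Linking transcript lines to speakers usually comes down to comparing timestamps. A minimal sketch, assuming the speech-to-text step returns `(start, end, text)` tuples and diarization returns `(start, end, speaker)` turns: each line goes to the speaker whose turn overlaps it most. The tuple formats and the `align_transcript` name are assumptions, and the 29-category text emotion classifier that would then score each line is not reproduced here.

```python
def align_transcript(lines, speaker_turns):
    """Attach a speaker to each transcript line by largest time overlap.

    lines: list of (start, end, text) from speech-to-text.
    speaker_turns: list of (start, end, speaker) from diarization.
    Lines that overlap no turn get speaker None.
    """
    aligned = []
    for l_start, l_end, text in lines:
        best_speaker, best_overlap = None, 0.0
        for s_start, s_end, speaker in speaker_turns:
            overlap = min(l_end, s_end) - max(l_start, s_start)
            if overlap > best_overlap:
                best_speaker, best_overlap = speaker, overlap
        aligned.append({"start": l_start, "end": l_end,
                        "speaker": best_speaker, "text": text})
    return aligned


# Hypothetical timings and text
turns = [(0.0, 5.0, "Speaker 1"), (5.0, 9.0, "Speaker 2")]
lines = [(0.2, 4.8, "Thanks for having me."),
         (4.6, 8.5, "Let's start with your early years.")]
for row in align_transcript(lines, turns):
    print(f'[{row["start"]:.1f}-{row["end"]:.1f}] {row["speaker"]}: {row["text"]}')
```

Choosing the largest overlap, rather than the turn containing the line's start time, tolerates the small boundary disagreements that speech-to-text and diarization timestamps typically have.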

Ever wondered how understanding emotions in conversations can boost your sales or inform critical decisions in various scenarios? Explore the power of Emotion Recognition API in transcript analysis.

Audio Emotion API Integration for Scalable Use

For teams looking to scale emotion analysis or integrate it into existing workflows, Imentiv’s Audio Emotion AI is also available as an API. This makes it easy to plug the emotion recognition engine into platforms like feedback systems, user testing environments, or enterprise tools. Developers can send audio data and receive structured emotional insights in return, including speaker-specific emotion tags, time-stamped emotion segments, and valence–arousal values. This flexibility allows businesses and researchers to embed emotional intelligence directly into their processes, apps, or products.
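The API schema is not reproduced in this post, so the sketch below only shows the consuming side: parsing a JSON response and summarizing it per speaker with the Python standard library. The payload shape and every field name in it (`segments`, `speaker`, `emotion`, `valence`, `arousal`) are hypothetical stand-ins modeled on the outputs listed above; consult Imentiv's API documentation for the real schema and for how requests are sent and authenticated.

```python
import json
from collections import Counter

# Hypothetical response payload for illustration only; the real API's
# field names and structure may differ.
SAMPLE_RESPONSE = """
{
  "segments": [
    {"speaker": "Speaker 1", "start": 0.0, "end": 4.5, "emotion": "neutral", "valence": 0.05, "arousal": 0.1},
    {"speaker": "Speaker 1", "start": 4.5, "end": 9.0, "emotion": "happy", "valence": 0.7, "arousal": 0.5},
    {"speaker": "Speaker 2", "start": 9.0, "end": 12.0, "emotion": "surprise", "valence": 0.3, "arousal": 0.8},
    {"speaker": "Speaker 1", "start": 12.0, "end": 15.0, "emotion": "happy", "valence": 0.6, "arousal": 0.4}
  ]
}
"""


def summarize(response_text):
    """Per speaker: segment count, most frequent emotion, mean valence/arousal."""
    data = json.loads(response_text)
    by_speaker = {}
    for seg in data["segments"]:
        by_speaker.setdefault(seg["speaker"], []).append(seg)
    summary = {}
    for speaker, segs in by_speaker.items():
        n = len(segs)
        summary[speaker] = {
            "segments": n,
            "top_emotion": Counter(s["emotion"] for s in segs).most_common(1)[0][0],
            "mean_valence": sum(s["valence"] for s in segs) / n,
            "mean_arousal": sum(s["arousal"] for s in segs) / n,
        }
    return summary


for speaker, stats in summarize(SAMPLE_RESPONSE).items():
    print(speaker, stats)
```

Keeping the parsing separate from the HTTP call makes it easy to swap in the real field names once they are confirmed against the documentation.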

Check out the Emotion API documentation here.

"Leadership is not about titles, positions, or flowcharts. It is about one life influencing another." - John C. Maxwell.

Why it Matters: Insights from CEO Talks

Audio Emotion Analysis of a CEO’s talk captures vocal tone, confidence, and emotional cues, giving you a clearer sense of what lies behind the words. That kind of insight can help investors catch subtle cues, help employees sense authenticity, and help the public feel more connected.

By paying attention to these emotional signals, organizations can make better decisions, strengthen trust, and better understand leadership communication.

In essence, Audio Emotion Recognition provides a powerful lens through which to view the unspoken aspects of leadership communication, offering invaluable insights that can drive better decision-making and stronger stakeholder relationships. From Audio Emotion Analysis to Speech Emotion Recognition APIs, these advancements are changing the way we perceive and interpret spoken communication.

Ready to explore voice-driven emotion insights? Start analyzing today

Disclaimer: Imentiv AI is a tool to assist human understanding. All findings are derived from observable cues and are intended to support, not replace, human evaluation or judgment. It does not claim to access or interpret an individual’s inner thoughts or intentions.