How AI Emotion Recognition for Virtual Meetings Improves Engagement

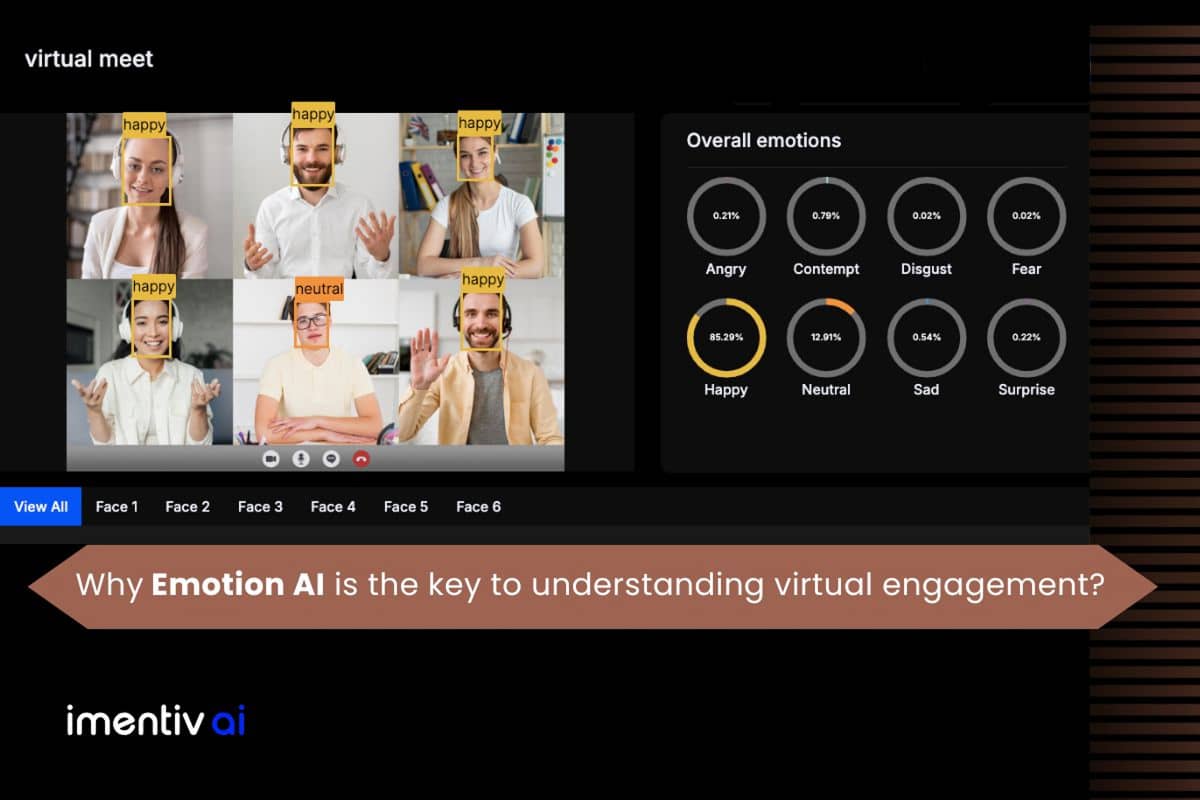

Virtual meetings drive collaboration in today’s remote and hybrid workplaces. Even with technological advances, many organizations still struggle to achieve meeting efficiency and meaningful engagement in online environments. To bridge this gap, our AI-powered video emotion analysis captures subtle emotional cues lost in virtual meetings, using facial expressions, voice tone, and transcript data to help teams reconnect on a deeper level.

“As remote collaboration becomes the norm, AI emotion recognition for virtual meetings is emerging as a crucial tool for decoding how teams feel, connect, and perform behind the screen.”

Our AI uses multimodal emotion recognition technology to reveal participants’ real-time emotional states. This empowers teams with deeper meeting analytics, offering a clearer understanding of how everyone feels, communicates, and engages during collaborative sessions.

The Emotion Gap in Virtual Meetings

Creating a space for authentic participation is essential in virtual meetings, where emotions and behaviors often shape how ideas are shared, received, and built upon.

Even Google’s new 3D conferencing system, Beam, reflects this shift. It is designed to restore eye contact, facial expressions, and body language so remote conversations feel more natural and connected, almost like being in the same room.

Without the full range of non-verbal cues, like tone of voice, facial expressions, and gestures, participants often struggle to read the emotional atmosphere. This “emotional gap” disrupts the flow of ideas, reduces meeting productivity, and increases the chance of miscommunication.

Research shows that when emotional cues are missing in virtual meetings, people experience mental fatigue from constantly trying to interpret others. It also leads to fewer spontaneous ideas, weaker team connection, and more frequent misunderstandings. The impact isn’t just emotional; companies lose millions each year on meetings where people show up but remain emotionally disengaged.

Our Multimodal AI Emotion Recognition Technology

Our AI Emotion Recognition technology analyzes virtual meetings across three layers (video, audio, and text) to deliver a multidimensional view of emotional engagement.

Imentiv’s Video Emotion Recognition

Imentiv’s Video Emotion AI uses advanced AI algorithms to analyze facial emotions, personality traits, and emotional intensity.

Our Video Emotion AI detects universal expressions such as anger, contempt, disgust, fear, happiness, neutrality, sadness, and surprise. When it comes to subtler cues like confusion, agreement, and engagement, our analysis interprets emotional intensity through the valence-arousal model, helping to uncover affective states, levels of engagement, and emotional nuance with greater precision.
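To make the valence-arousal idea concrete, here is a minimal sketch of how a single (valence, arousal) reading could be placed in one of four quadrants. The `AffectPoint` type, the [-1, 1] scale, the quadrant names, and the `dead_zone` threshold are all illustrative assumptions, not Imentiv's actual data format or scoring.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AffectPoint:
    """A point in valence-arousal space (hypothetical type).

    Both axes are assumed to lie in [-1, 1] for this illustration.
    """
    valence: float  # negative (unpleasant) to positive (pleasant)
    arousal: float  # low energy (calm) to high energy (activated)


def quadrant(point: AffectPoint, dead_zone: float = 0.1) -> str:
    """Name the quadrant a point falls in, or 'neutral' near the origin."""
    if abs(point.valence) < dead_zone and abs(point.arousal) < dead_zone:
        return "neutral"
    if point.valence >= 0:
        return "pleasant-activated" if point.arousal >= 0 else "pleasant-calm"
    return "unpleasant-activated" if point.arousal >= 0 else "unpleasant-calm"


print(quadrant(AffectPoint(valence=0.6, arousal=0.7)))    # pleasant-activated
print(quadrant(AffectPoint(valence=-0.5, arousal=-0.4)))  # unpleasant-calm
```

Under this reading, a subtle cue such as confusion would show up as a position in the space (for example, mildly unpleasant and moderately activated) rather than as one of the universal expression labels.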

Imentiv’s Audio Emotion Recognition

Imentiv’s Audio Emotion AI processes vocal features, including tone, pitch, rhythm, and vocal intensity, to identify emotional cues like stress, enthusiasm, or fatigue. It detects universal emotions such as sadness, happiness, surprise, fear, boredom, anger, disgust, and neutrality, and interprets emotional intensity through the valence-arousal model, integrated with our emotion wheel framework. This helps you understand affective states and vocal engagement with greater depth and clarity.

Our Speech Emotion Recognition technology also includes speaker recognition and interruption detection, making it a powerful tool for conversation analytics across various audio-based interactions.

Imentiv’s Transcript Emotion Analysis

Imentiv’s Text Emotion AI uses natural language processing (NLP) to evaluate emotional tone, sentiment patterns, assertiveness, withdrawal, and shifts in mood. It analyzes the speech transcript sentence by sentence across 29 emotion categories, including admiration, amusement, anger, annoyance, approval, caring, confusion, curiosity, desire, disappointment, disapproval, disgust, embarrassment, excitement, fear, gratitude, grief, joy, love, nervousness, optimism, pride, realization, relaxed, relief, remorse, sadness, and surprise.

Together, these three layers (visual cues, vocal tone, and textual content) create a high-resolution emotional profile of any virtual meeting, allowing you to detect emotional alignment, hidden tensions, and shifts in engagement that single-layer analysis often misses.
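One common way to combine per-modality results is late fusion: each layer produces its own emotion scores, and the scores are merged afterwards. The sketch below shows a simple weighted average. It is a generic illustration only; the `fuse` function, the weights, and the sample scores are assumptions, and nothing here describes how Imentiv actually combines its layers.

```python
from typing import Dict

Distribution = Dict[str, float]  # emotion label -> score


def fuse(modalities: Dict[str, Distribution],
         weights: Dict[str, float]) -> Distribution:
    """Weighted average of per-modality scores, renormalized to sum to 1."""
    fused: Distribution = {}
    for name, scores in modalities.items():
        w = weights.get(name, 0.0)  # modalities without a weight are ignored
        for emotion, p in scores.items():
            fused[emotion] = fused.get(emotion, 0.0) + w * p
    total = sum(fused.values())
    if total == 0:
        raise ValueError("no weighted scores to fuse")
    return {emotion: value / total for emotion, value in fused.items()}


# Made-up scores for a single moment in a meeting.
sample = {
    "video": {"neutral": 0.6, "happy": 0.4},
    "audio": {"neutral": 0.2, "fear": 0.8},
    "text": {"neutral": 0.5, "curiosity": 0.5},
}
equal_weights = {"video": 1.0, "audio": 1.0, "text": 1.0}
fused = fuse(sample, equal_weights)
print(max(fused, key=fused.get))  # neutral
```

Even in this toy example, the fused result keeps a sizeable fear score from the audio layer that a video-only view would not contain at all.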

Real-World Case Study: GitLab’s Virtual Product Team Meeting

To demonstrate how our Emotion AI technology adds psychological nuance to remote collaboration analysis, we applied it to GitLab’s recorded product team meeting. This virtual session offered a rich landscape of emotional expression, personality dynamics, and subtle cues that often go unnoticed in traditional observation, highlighting the value of analyzing both visible and hidden emotional layers in product team communication.

Emotional Expression in Virtual Settings: A Psychological Lens

Neutral Facial Expressions: Signs of Mental Focus, Not Detachment

The most dominant emotion seen in the visual data was neutrality, appearing in about 54% of the footage. Rather than signaling emotional disconnection, this often reflects deep cognitive engagement. According to Cognitive Load Theory (Sweller, 1988), when individuals are processing complex information or focusing on tasks, they tend to reduce facial expressiveness. In virtual meetings, this is amplified.

Remote settings reduce the rich, in-person cues that support emotional connection, such as micro-expressions or mirroring. This can create an emotional "flattening" effect, where people seem less expressive because they're focused, not disengaged. This observation is consistent with Social Presence Theory (Short, Williams & Christie, 1976), which explains how reduced co-presence in virtual settings can naturally lead to subdued emotions.
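A share such as the 54% neutrality figure can be read as the fraction of analyzed frames whose dominant label is a given emotion. Assuming that reading (the source does not spell out how the share is computed), a minimal sketch with made-up labels looks like this:

```python
from collections import Counter
from typing import Dict, List


def emotion_shares(labels: List[str]) -> Dict[str, float]:
    """Percentage of frames carrying each dominant-emotion label."""
    if not labels:
        return {}
    counts = Counter(labels)
    return {emotion: 100.0 * n / len(labels)
            for emotion, n in counts.most_common()}


# Ten hypothetical per-frame labels, not data from the GitLab meeting.
labels = ["neutral"] * 5 + ["happy"] * 3 + ["sad"] * 2
print(emotion_shares(labels))
# {'neutral': 50.0, 'happy': 30.0, 'sad': 20.0}
```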

Happiness in Virtual Meetings: A Signal of Team Bonding

The second most common emotion was happiness, making up around 28% of expressions. However, this doesn't necessarily point to a cheerful mood. In team dynamics, moments of happiness are often signs of alignment, interpersonal bonding, or collective satisfaction.

In the context of product teams, such expressions may be linked to:

- Recognition of progress or milestone achievements

- Light-hearted moments or shared humor

- Satisfaction from solving a complex issue collaboratively

These moments reflect what Tuckman’s Group Development Model defines as the "norming" and "performing" phases. They are also consistent with Fredrickson's Broaden-and-Build Theory (2001), which holds that even brief moments of positive emotion enhance creativity, openness to ideas, and team cohesion.

Sadness and Emotional Fatigue: Warning Signs of Virtual Burnout

A noticeable presence of sadness (~12%) surfaced in both facial expressions and voice analysis. This may indicate virtual fatigue, a well-documented phenomenon. According to Jeremy Bailenson (2021), constant self-monitoring, lack of eye contact, and sitting still for extended periods contribute to emotional drain in video calls.

This sadness could also point to:

- Feeling emotionally exhausted or burned out

- Suppressing true feelings due to social pressure

- A sense of helplessness or lack of control if decisions remain unresolved

Such signs are often tied to Learned Helplessness (Seligman, 1975). Without clear direction or psychological safety, people may withdraw emotionally even if they appear engaged on the surface.

Vocal Indicators of Hidden Tension: Disgust and Fear

While faces appeared composed, the audio analysis revealed deeper emotional undercurrents. Two emotions stood out: disgust (20.8%) and fear (14.7%).

Psychologically, disgust in speech often points to:

- Disapproval or quiet disagreement with what's being said

- A sense of internal judgment about decisions or methods

- Emotional resistance or conflict not openly expressed

Meanwhile, fear in the voice may suggest:

- Anxiety about being evaluated or misunderstood

- Stress from looming deadlines or high-stakes product goals

- Discomfort caused by unclear expectations or shifting priorities

These insights align with Lazarus' Cognitive Appraisal Theory, which states that emotions like fear or disgust emerge based on how a person evaluates their control, the stakes involved, and the potential outcome of the situation.

Boredom in Voice: Emotional Disengagement Without Conflict

A smaller but meaningful portion of voice data showed signs of boredom (7.1%) and sadness, which may reflect passive disengagement. This is when individuals are mentally checked out, not angry or upset, but simply unmotivated to contribute.

In virtual meetings, boredom often stems from:

- Repetitive or aimless discussions

- Feeling like one’s input isn’t needed or valued

- A lack of interaction or structured participation

This ties into Self-Determination Theory (Deci & Ryan, 1985), which highlights that when our needs for competence, autonomy, or connection aren’t met, motivation drops and emotional withdrawal begins.

Personality Insights: Team Temperament Behind the Emotions

The analysis also surfaced interesting personality dynamics, inferred from both emotional tone and behavioral cues:

- Moderate Extraversion (0.49): A mix of expressive and more reserved members. In remote meetings, introverted individuals might withdraw even further due to a lack of non-verbal cues.

- Elevated Neuroticism (0.55): Indicates a tendency toward emotional sensitivity, tension, or stress when roles, expectations, or feedback are unclear.

- High Openness (0.59): Suggests a team rich in creativity and flexible thinking. However, these traits need freedom to thrive; rigid formats or repetitive tasks may frustrate such team members.

- Average Agreeableness and Conscientiousness (~0.55): Shows a generally cooperative group with a fair sense of structure and professionalism, though not extreme in either direction.

Emotional Mismatch: What You See vs. What You Hear

One of the most striking patterns was a disconnect between facial expressions and vocal tone. While people visually appeared neutral or even happy, their voices often revealed underlying frustration, anxiety, or disengagement.

This is an example of surface acting (Hochschild, 1983), when people manage their facial expressions to meet social expectations, even if their internal state is different.

In virtual settings, this dissonance is often intensified:

- Negative emotions are suppressed due to fear of judgment

- Delayed reactions or muted cues reduce natural rapport

- Lack of psychological safety prevents open emotional expression

Over time, this disconnect can lead to emotional exhaustion, reduced trust, and lower creativity, critical risks for any product team striving for innovation.
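The face-versus-voice mismatch described above can be flagged with a simple rule: look for time segments where the face reads neutral or positive while the voice reads clearly negative. The sketch below assumes per-segment valence scores in [-1, 1] for each modality. The `Segment` type, the `gap` threshold, and the sample values are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    start_s: float        # segment start time in seconds
    face_valence: float   # assumed range [-1, 1]
    voice_valence: float  # assumed range [-1, 1]


def flag_mismatches(segments: List[Segment], gap: float = 0.4) -> List[Segment]:
    """Segments where the face looks non-negative but the voice is negative
    and the two valence scores differ by at least `gap`."""
    return [
        s for s in segments
        if s.face_valence >= 0
        and s.voice_valence < 0
        and (s.face_valence - s.voice_valence) >= gap
    ]


segments = [
    Segment(0.0, 0.1, 0.2),     # face and voice agree
    Segment(30.0, 0.3, -0.4),   # composed face, negative voice
    Segment(60.0, -0.2, -0.3),  # both negative, so no masking
    Segment(90.0, 0.05, -0.1),  # difference too small to flag
]
print([s.start_s for s in flag_mismatches(segments)])  # [30.0]
```

A flagged segment is a prompt for a closer look, not a verdict on anyone's inner state; the threshold would need tuning against real recordings.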

Recommendations: Building Emotionally Intelligent Virtual Teams

To reduce emotional suppression and improve team connection, the following psychological strategies are recommended:

- Start with Emotional Check-ins: Even 1-2 minutes of informal chatter or pulse questions can set a more authentic emotional tone.

- Acknowledge Discomfort: When leaders sense hesitation or stress, naming it out loud (with empathy) helps build trust.

- Vary Participation Methods: Use polls, chat inputs, and clear turn-taking structures to keep all voices heard and reduce boredom.

- Train Managers in Emotional Awareness: Remote empathy skills are crucial. Leaders should learn to spot emotional cues in tone, pauses, and non-responses.

- Offer Post-Meeting Feedback Channels: Give team members an outlet for sharing feelings or ideas that weren’t expressed during the call.

This in-depth emotional and personality analysis of GitLab’s product team meeting reveals a team that appears calm and productive on the surface but is grappling with deeper emotional undercurrents.

While professionalism and creativity are present, subtle signs of stress, sadness, and disengagement suggest that greater attention to emotional dynamics could elevate both morale and innovation. Virtual teams need good tools and emotionally attuned cultures where psychological safety and authentic connection are prioritized.

To explore the Emotion AI analysis of the GitLab product meeting clip, click here.

Get emotional and behavioral insights with Imentiv’s Emotion AI meeting assistant

Integrating emotional intelligence into your meeting workflow is seamless with Imentiv’s Emotion API and detailed emotional analytics.

The process typically involves:

API Integration with Your Workflow

Our Emotion API integrates with your video conferencing tools or post-meeting workflows. It analyzes facial expressions, vocal tone, and spoken content to generate comprehensive emotional data, including personality trait analysis based on video cues (face and voice). This gives a multi-dimensional view of each participant’s communication style, emotional disposition, and cognitive-emotional balance.

Our video emotion recognition technology is also built to integrate directly into your video conferencing systems, making real-time emotion tracking and post-meeting emotional analysis a seamless part of your workflow.

Visual and Granular Emotion Data

Imentiv provides high-resolution data across several emotional dimensions:

- Valence (emotional direction: positive vs. negative)

- Arousal (emotional intensity or energy)

- Emotion Intensity (strength of emotional expression)

This data is delivered by actor (face), by time segment, or frame-by-frame, whether you're analyzing the full meeting, a specific participant, or a moment of emotional shift. This structure helps you drill down into emotional patterns with precision.
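As a rough illustration of these three views, the sketch below starts from frame-level records and rolls them up by actor and by time segment. The `FrameRecord` schema and field names are assumptions made for this example; they are not the actual shape of Imentiv's API output.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, NamedTuple


class FrameRecord(NamedTuple):
    """One frame-level reading for one face (hypothetical schema)."""
    actor: str
    time_s: float
    valence: float
    arousal: float
    intensity: float


def mean_valence_by_actor(frames: List[FrameRecord]) -> Dict[str, float]:
    """Average valence per actor across the whole recording."""
    grouped = defaultdict(list)
    for f in frames:
        grouped[f.actor].append(f.valence)
    return {actor: mean(values) for actor, values in grouped.items()}


def mean_intensity_by_segment(frames: List[FrameRecord],
                              segment_s: float = 10.0) -> Dict[int, float]:
    """Average intensity per fixed-length time segment (index 0, 1, 2, ...)."""
    grouped = defaultdict(list)
    for f in frames:
        grouped[int(f.time_s // segment_s)].append(f.intensity)
    return {idx: mean(values) for idx, values in sorted(grouped.items())}


frames = [
    FrameRecord("A", 1.0, 0.2, 0.1, 0.3),
    FrameRecord("A", 12.0, 0.4, 0.3, 0.5),
    FrameRecord("B", 2.0, -0.2, 0.5, 0.7),
    FrameRecord("B", 13.0, -0.4, 0.6, 0.9),
]
print(mean_valence_by_actor(frames))
print(mean_intensity_by_segment(frames))
```

Keeping the frame-level records as the base layer means the per-actor and per-segment views can be recomputed with different segment lengths without reprocessing the video.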

Meeting Highlights

Our system also generates meeting highlights: automated summaries with emotional intensity curves, key timestamps, and behavioral insights. These help leaders review meetings efficiently, capturing what mattered emotionally without scanning through the entire recording.

For example:

- Emotional peaks in laughter, frustration, or excitement

- Moments of group alignment or emotional disconnect

- Speaker-specific emotion summaries
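Emotional peaks like those listed above can be located on an intensity curve with a basic local-maximum check. The sketch below is one simple way to do it, assuming the curve is a list of (timestamp, intensity) pairs with intensity in [0, 1]. The `find_peaks` function and its threshold are illustrative and do not describe how the meeting highlights feature is built.

```python
from typing import List, Tuple


def find_peaks(curve: List[Tuple[float, float]],
               threshold: float = 0.7) -> List[float]:
    """Timestamps of local maxima whose intensity meets the threshold."""
    peaks = []
    for i in range(1, len(curve) - 1):
        _, prev = curve[i - 1]
        t, cur = curve[i]
        _, nxt = curve[i + 1]
        # Strictly above the previous sample so a flat plateau counts once.
        if cur >= threshold and cur > prev and cur >= nxt:
            peaks.append(t)
    return peaks


# Made-up intensity samples taken every 10 seconds.
curve = [(0.0, 0.2), (10.0, 0.5), (20.0, 0.9), (30.0, 0.4),
         (40.0, 0.75), (50.0, 0.8), (60.0, 0.3)]
print(find_peaks(curve))  # [20.0, 50.0]
```

The returned timestamps are the kind of markers a reviewer could jump to instead of scanning the full recording.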

At Imentiv, we have developed an AI Insights feature that lets users interact with and ask questions about the emotional data extracted from processed content, enhancing insights and understanding of emotional dynamics.

Expert Psychological Interpretation

Along with emotion data, Imentiv AI provides psychological interpretations from our expert team. This feature adds critical context to emotional signals, grounding them in psychological theory and helping users make sense of subtle cues that might otherwise be overlooked.

Especially valuable for in-depth video reviews, these expert insights are available upon request.

Bridging the Emotional Gap with Precision

Behind every task update and product decision in a virtual room, emotions shape outcomes. Imentiv’s multimodal Emotion AI doesn’t just label emotions. It provides a real-time framework to interpret, respond to, and learn from them.

For product teams, managers, or anyone leading remote workforces, this kind of insight creates space to respond, not just react. With AI tools that decode emotional nuance, teams can create meetings where everyone feels seen, heard, and emotionally understood. That’s how real alignment starts.

The Science Behind Emotion AI

Our emotion recognition technology is rooted in decades of research in affective computing and psychology. The foundational work of Paul Ekman on universal facial expressions, particularly through the development of the Facial Action Coding System (FACS) and the identification of Action Units (AUs), provides the theoretical framework for emotion recognition.

The system interprets facial expressions, vocal tone, and language to uncover emotional signals across multiple communication channels. For language and text-based emotion analysis, we reference the Circumplex Model of Affect, which maps emotional states along dimensions of valence and arousal to capture subtle emotional nuance in written communication.

Each modality is processed to reveal emotion patterns that enhance understanding of human behavior in context.

Importantly, our approach addresses the ethical considerations inherent in emotion analysis:

- All data processing follows strict privacy protocols

- Participants maintain control over their information

- Analysis focuses on patterns rather than individual judgments

- The system is designed to augment human understanding, not replace it

This results in a scientifically grounded approach to emotional intelligence. It respects individual privacy while delivering meaningful and actionable insights.

This blog presents conceptual applications of emotion recognition technology in professional settings. Implementation should always prioritize user consent, data privacy, and ethical considerations.